Perhaps you are designing an embedded inference engine for edge computing. Or you are taking the next step in automotive vision processing. Or maybe you have an insight that can challenge Nvidia and Google in the data center.

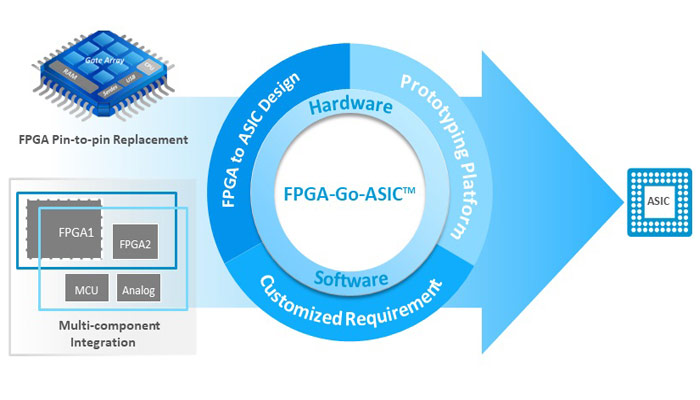

Across a wide range of performance needs, environments, and applications, AI accelerator architectures present unique challenges, not just in design but in verification and implementation as well. Moving from an architecture to an FPGA—almost a mandatory step in this space—and then on to a production ASIC is a non-trivial journey. But it doesn’t have to be an adventure if you plan ahead.

Pulled three ways:

If you choose, as most teams will, to build a proof of concept or a verification platform in FPGAs, you will be pulled in three directions at once from the very start, as shown in figure 1. The architects will want your FPGA implementation to stay as close as possible to their microarchitecture; for them, the whole point is to see how efficiently the design implements their algorithms. The software team, however, will push you to optimize the FPGA design for performance, which means bending the architecture to fit the strengths and limitations of the chosen FPGA chips. You can count on marketing to add to this pressure, especially if their plans include an early market entry with the FPGAs. The risk is that the proof of concept takes on a life of its own, like Frankenstein's creature.